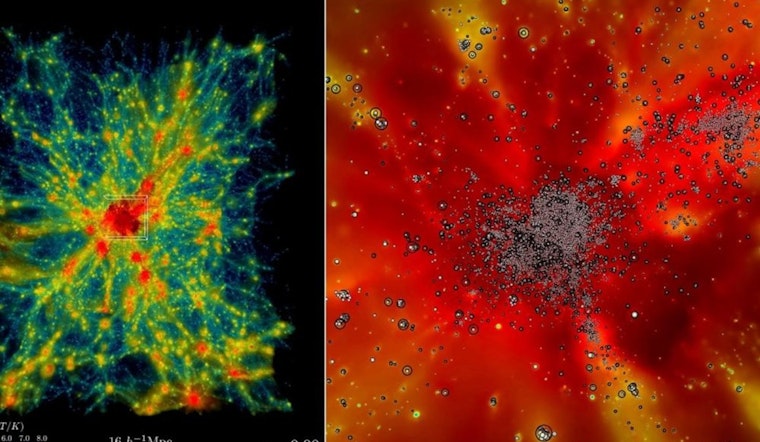

Researchers at the Department of Energy’s Argonne National Laboratory have carried out the most comprehensive simulation of the universe to date using Frontier, the fastest supercomputer in the world. The achievement, a significant advance for astrophysics and computational research, was announced in an article from Oak Ridge National Laboratory, whose Oak Ridge Leadership Computing Facility (OLCF) hosts Frontier. By running cosmological hydrodynamics simulations at an unprecedented scale, the researchers have raised the bar for detailed simulations that include both dark matter and atomic matter.

Salman Habib, division director for Computational Sciences at Argonne, told Oak Ridge National Laboratory, “There are two components in the universe: conventional matter, or atomic matter, and dark matter, which as far as we know only interacts gravitationally.” The simulations cover the universe’s expansion over billions of years and model phenomena such as hot gas and the formation of stars and galaxies. According to the Oak Ridge National Laboratory report, simulations at this scale were previously feasible only as gravity-only models.

The leap in computing was enabled by the Hardware/Hybrid Accelerated Cosmology Code (HACC), a Gordon Bell Prize finalist in 2012 and 2013 that was originally written for petascale machines. Over the past seven years, Salman Habib and his team have been upgrading HACC for exascale-class supercomputers as part of ExaSky, a project within the Exascale Computing Project (ECP). One of the project’s requirements was that the code run roughly 50 times faster than it had on Titan, the fastest supercomputer at the time of the ECP’s launch.

Ultimately, HACC ran almost 300 times faster than the Titan reference run on Frontier’s compute nodes, which are powered by AMD Instinct MI250X GPUs. “It’s not just the sheer size of the physical domain, which is necessary to make direct comparison to modern survey observations enabled by exascale computing,” OLCF director of science Bronson Messer said, according to Oak Ridge National Laboratory. “It’s also the added physical realism of including the baryons and all the other dynamic physics that makes this simulation a true tour de force for Frontier.”

The HACC team members who helped make the simulation a success include Michael Buehlmann, JD Emberson, Katrin Heitmann, Patricia Larsen, Adrian Pope, Esteban Rangel, and Nicholas Frontiere, who oversaw the Frontier simulations. Before running on Frontier, HACC was tested on the exascale-class Aurora supercomputer at the Argonne Leadership Computing Facility and on the Perlmutter supercomputer at the National Energy Research Scientific Computing Center at Lawrence Berkeley National Laboratory.

The combination of highly skilled personnel, cutting-edge equipment, and financial backing underscores how crucial collaboration is to science and research. Notably, the effort was supported by DOE’s Office of Science, which is recognized as the largest funder of basic research in the physical sciences in the US. Visit the official Oak Ridge National Laboratory news release to learn more about this work and how it is shaping our understanding of the cosmos.
